Many of the individuals and companies I know use Amazon Web Services (AWS).

I had historically stayed away. I had websites (both business and personal) hosted with DigitalOcean and Linode. They worked well for me, and there was little reason to change.

A few months ago it became apparent to me that one of my company's products would require a lot of disk space. Not only that, but given the sensitivity of the data it was important that there was an efficient way of backing it up.

Linode have recently announced beta testing of their block storage product, and DigitalOcean have had such an offering for a long time. However, given that I was entering new territory anyway, I figured that I would give AWS a go. I ended up jumping down a rabbit hole, which resulted in my trying out a lot of Amazon's products. Here are my thoughts.

Elastic Block Store (EBS)

EBS is Amazon's block storage offering. It allows you to create volumes of up to 16TB. It costs $0.10 per GB-month, which is exactly the same as DigitalOcean and Linode.

The selling point for me was the Snapshot functionality. You can back up your volumes with one click. The backups are snapshots of the state at a given time and do not require any downtime. Simple and efficient.

Load balancing

One of my pre-emptive thoughts was that EthTools.com has the potential to scale quickly and erratically. AWS has two main load balancing products and a number of 'Autoscaling' features. You can implement setups whereby, if your servers exceed a certain load, a new server is automatically spun up and added into the load balancer's rotation. The small caveat is that your load balanced servers need to be EC2 instances, and as such Amazon were tempting me into moving more stuff over to them.
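The scaling rule described above can be sketched roughly as follows. This is a toy model in Python and not the AWS Auto Scaling API; the function name `desired_server_count`, the thresholds, and the server limits are all hypothetical values chosen for illustration.

```python
def desired_server_count(current_servers, avg_load,
                         scale_up_at=0.75, scale_down_at=0.25,
                         min_servers=1, max_servers=10):
    """Return the pool size after one evaluation of a simple threshold rule.

    avg_load is the average utilisation across the pool, from 0.0 to 1.0.
    All thresholds and limits are hypothetical defaults.
    """
    if avg_load > scale_up_at:
        target = current_servers + 1   # too busy: add a server to the rotation
    elif avg_load < scale_down_at:
        target = current_servers - 1   # mostly idle: remove one
    else:
        target = current_servers       # within the comfortable band
    # Never go below the minimum or above the maximum pool size.
    return max(min_servers, min(max_servers, target))
```

Adding or removing one server per evaluation keeps the pool from swinging wildly when load is erratic, at the price of reacting more slowly to a sudden spike.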

AWS offers interesting approaches to common server admin issues. For example, you do not really need firewalls any more because AWS has the concept of Security Groups. They have essentially pulled out complex command line based firewall admin, and put it into a pretty user interface.

Whilst these features have a learning curve, so does everything. Amazon's approach seems to be that if you put in the effort to learn about all their tooling it will pay off because their tooling is better.

To be fair, it is.

Cost

The problem is cost. Amazon know that they have a good product, and can thus charge a premium for it. Basic EC2 instances (see below) across the board are pretty expensive. They do however offer 'Reserved Instances' which offer significant discounts for longer term tie-ins. A clever psychological trick. It worked on me.

The products.

Elastic Compute Cloud (EC2)

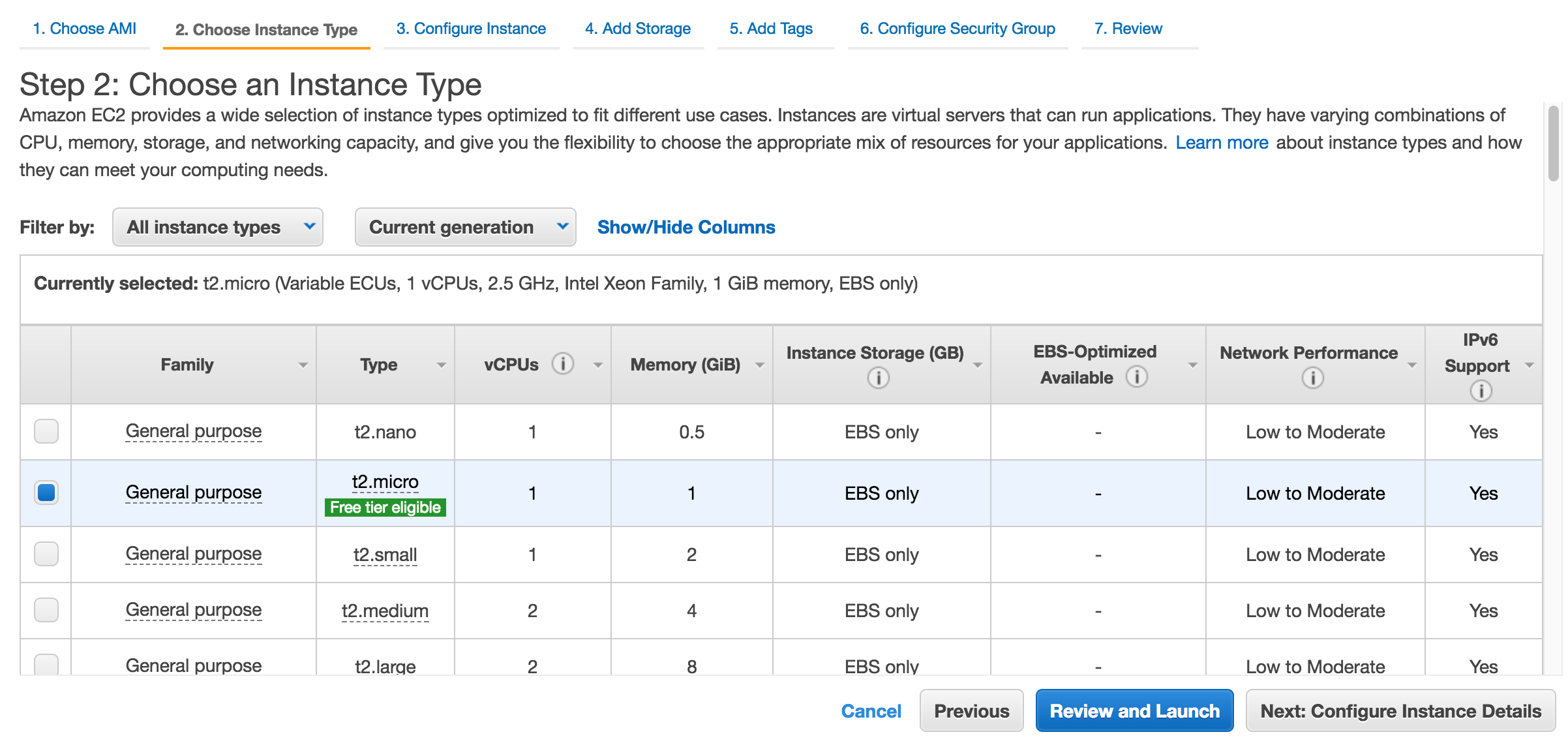

EC2 is the basic web server offering. It is really simple to set up a server instance. It is a step-by-step 1, 2, 3 process. I have not done any benchmarks (yet) but the servers seem to be fast, and I have had absolutely no issues with using them.

They offer servers in a range of categories - high CPU, high memory etc. If you have serious server requirements, AWS has you covered. They also have data centers across the world so you can set up a geographically diverse server cluster.

One of their offerings is the T2 instance type which essentially provides a base level of CPU performance which you can burst above for a certain amount of (package dependent) time. Having been tempted in by the idea of using AWS for everything, I thought that these would be my saviour. The long and the short of it was that they were not.

Running a Geth or Parity node is relatively CPU intensive (especially when initially syncing the chain). I thought that a T2 medium instance could handle this (and to be fair it can), but it became apparent that it was pushing it a little. The instances would run, but on the borderline of the CPU limits. Getting a setup similar to the one I previously had on Linode would cost me significantly more on AWS. Was the simplicity really worth the additional cost?

Data Transfer

The nail in the coffin was the way that Amazon charges for data transfer. They subsidise their various products. Some are loss leaders designed to pull you in. Then they hit you with the killer - data transfer.

Data transfer between EC2 instances is free or very cheap (if between regions), but data transfer out to the Internet is $0.09 per gigabyte. It seems like a tiny amount, but for a data intensive app it adds up quickly.

When EthTools.com is checking for a transaction confirmation it queries our nodes and gets a JSON response back. It does this multiple times per minute. If potentially thousands of people are waiting for confirmations at a given time it quickly adds up.
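To see how quickly this adds up, here is a back-of-the-envelope sketch in Python. Only the $0.09 per gigabyte rate comes from the figures above; the request rate, the response size, and the choice to treat a gigabyte as 10^9 bytes are assumptions made purely for illustration.

```python
EGRESS_USD_PER_GB = 0.09  # outbound rate quoted above


def monthly_egress_cost(requests_per_minute, response_bytes, days=30):
    """Estimate the monthly cost of data transfer out to the Internet.

    Assumes every response is the same size and 1 GB = 10**9 bytes.
    """
    requests_per_month = requests_per_minute * 60 * 24 * days
    total_gb = requests_per_month * response_bytes / 1e9
    return total_gb * EGRESS_USD_PER_GB


# Hypothetical load: 2,000 waiting users polling 4 times a minute,
# each poll returning a 2,000 byte JSON response.
cost = monthly_egress_cost(requests_per_minute=2000 * 4, response_bytes=2000)
print(f"${cost:.2f} per month")
```

With those made-up numbers the polling traffic alone comes to roughly $62 a month, before any larger block or transaction responses are counted.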

The price of EC2 just about worked for me, but when you add in data transfer it was a deal breaker.

Peer To Peer (P2P)

The other thing that completely slipped my mind (initially) was the Peer To Peer nature of the Ethereum blockchain. Nodes are constantly pulling in data from other nodes to sync the chain. Fortunately data transfer in is free BUT the other side of P2P is that data from your nodes is synced to other people's nodes. This is a significant amount of outgoing data transfer all day, every day, for each and every node.

I had a brief play at disabling outgoing traffic, but given that I was already on the edge I decided that AWS was not fit for this purpose.

API Gateway

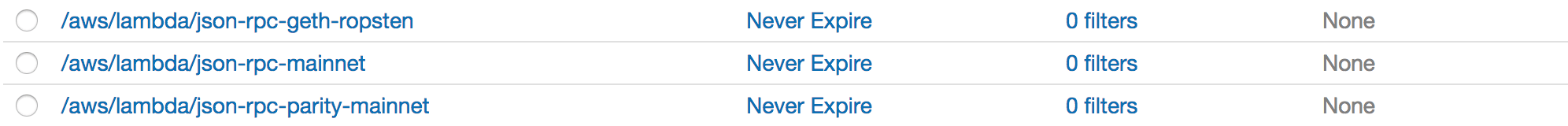

Along the way I had stumbled upon the API Gateway offering. It provides easy to implement tooling for (it's in the name) an API Gateway. I set this up in front of a node, and had a play.

At first I had it proxy requests to a node and log the incoming requests to CloudWatch. You can then filter the CloudWatch logs to find, for example, all requests for eth_getBlockByNumber. The problem was again.. cost. Ingesting logs, whilst cheap(ish), is absolutely not cheap when you have millions of daily requests, especially if those requests are potentially returning large JSON objects of block/transaction data. Given the lack of flexibility in the logging options (I suspect for simplicity), you cannot log just the request body.

I had a further think and ended up at..

AWS Lambda

AWS Lambda allows you to run code in the cloud without a server. You upload a script (in my case JavaScript on Node.js), and then you can execute it.

You pay based on the compute time. Simple.

I wrote a script to proxy requests to my backend nodes. Using Lambda allows you a lot of additional flexibility. For example, you can parse the incoming request body and block (not execute) calls for heavy RPC methods, or methods with large response bodies.
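The filtering idea can be sketched as below. The original script was written in JavaScript; this is a Python illustration only, not the actual proxy. The deny-list contents, the function name `filter_rpc_request`, and the decision to answer blocked calls with the JSON-RPC "method not found" code are all assumptions.

```python
import json

# Hypothetical deny-list; the post does not say which methods were blocked.
BLOCKED_METHODS = {"eth_getLogs", "debug_traceTransaction"}


def rpc_error(request_id, code, message):
    """Build a JSON-RPC 2.0 error response object."""
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def filter_rpc_request(raw_body):
    """Return (allowed, payload).

    If allowed is True, payload is the parsed request to forward to a node.
    If allowed is False, payload is an error response to return directly.
    """
    try:
        request = json.loads(raw_body)
    except json.JSONDecodeError:
        return False, rpc_error(None, -32700, "Parse error")

    # Batch requests (JSON arrays) are rejected to keep this sketch short.
    if not isinstance(request, dict):
        return False, rpc_error(None, -32600, "Invalid request")

    method = request.get("method")
    if method in BLOCKED_METHODS:
        # Reusing -32601 for blocked methods is a choice made for this sketch.
        return False, rpc_error(request.get("id"), -32601,
                                f"Method not allowed: {method}")
    return True, request
```

Because the check happens before anything is forwarded, a blocked call never reaches a node and never produces a large response body to pay transfer on.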

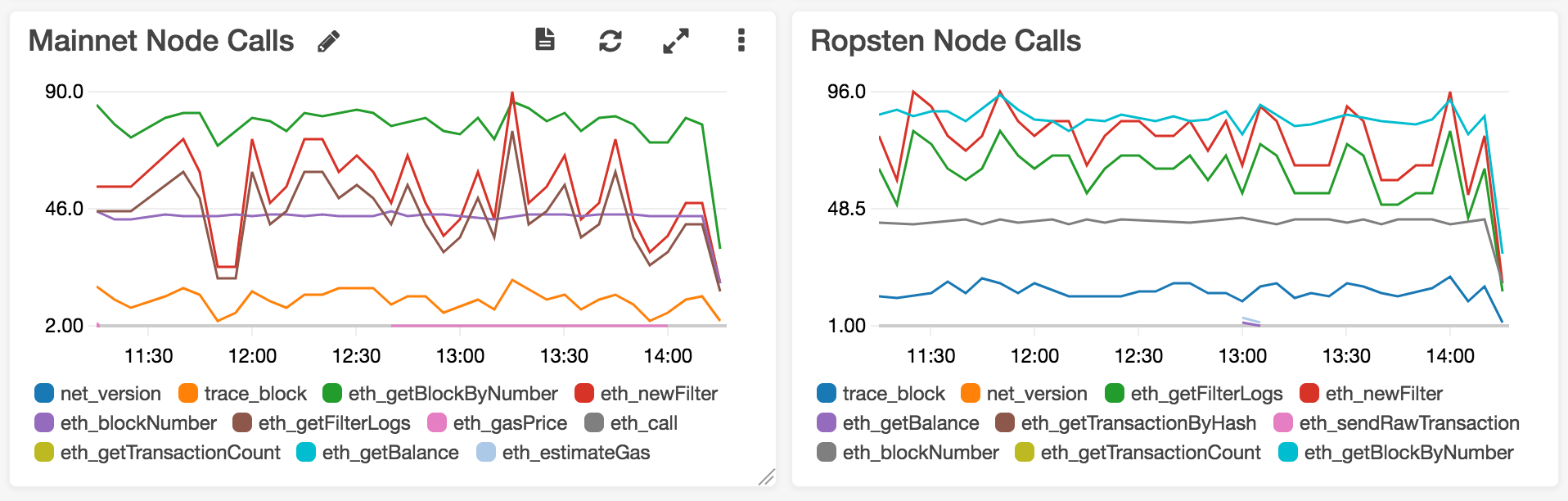

I took advantage of the environment to parse the request and log the RPC methods that were being called using CloudWatch custom metrics. AWS have a JavaScript SDK so this is relatively easy.
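As a rough illustration of what logging methods as custom metrics might involve, the Python sketch below turns a list of observed RPC method names into entries shaped like the `MetricData` list that CloudWatch's PutMetricData operation accepts. The metric name `RpcCalls`, the dimension name `Method`, and the namespace in the comment are hypothetical, and nothing is actually sent to AWS here.

```python
from collections import Counter


def build_metric_data(methods):
    """Aggregate RPC method names into CloudWatch-style metric entries.

    Returns one entry per distinct method, sorted by method name.
    The metric and dimension names are hypothetical.
    """
    counts = Counter(methods)
    return [
        {
            "MetricName": "RpcCalls",
            "Dimensions": [{"Name": "Method", "Value": method}],
            "Value": float(count),
            "Unit": "Count",
        }
        for method, count in sorted(counts.items())
    ]


# With boto3 installed and credentials configured, the list could be sent with
# something like:
#   boto3.client("cloudwatch").put_metric_data(
#       Namespace="EthProxy",  # hypothetical namespace
#       MetricData=build_metric_data(seen_methods))
```

Batching counts before sending means one API call can cover many requests, rather than one call per proxied request.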

The problem with this solution was however.. cost. Not Lambda cost, but data transfer cost. I proxied calls arriving at API Gateway through to my backend Lambda function. The problem is that API Gateway traffic always goes out to the Internet, and is charged at that $0.09/GB rate.

At this point I had noted that AWS really does have every base covered, but for my requirements it was not cost effective.

Lambda runs Node code. My JavaScript was solid. Why not just run the proxy myself?

Back to Linode

Linode offer the most cost effective/simple 'micro' instances.

I spun some up, installed Node, set up my 'server' and was on my way.

The AWS SDK is offered as an NPM package, so I can still log the requests hitting my proxy to Amazon's CloudWatch. To be more cost effective than AWS, each proxy has to serve a paltry 55GB of data per month. We do that in a day.
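The post does not show how the 55GB figure was reached. One reading that reproduces it, assuming (this is not stated above) a micro instance costing about $5 per month with its bandwidth allowance included, is the volume at which AWS's $0.09 per gigabyte outbound charge alone would equal the whole instance price:

```python
def break_even_gb(instance_usd_per_month, egress_usd_per_gb=0.09):
    """Monthly outbound volume at which per-GB egress fees alone
    match a flat-rate instance price."""
    return instance_usd_per_month / egress_usd_per_gb


# Assumed price of $5 per month; the real figure is not given in the post.
print(f"{break_even_gb(5.0):.1f} GB per month")
```

Any proxy serving more than that each month would cost less on the flat-rate instance than the data transfer charge alone would on AWS.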

The issue was that by being back at Linode we could no longer make use of the autoscaling and load balancing offered by AWS.

The thing is though.. AWS load balancing functionality is not cost effective either. It has a base cost, and then a cost based on a complex calculation pertaining to bandwidth, connections etc. I had a play with one for a month or two, and it cost around $60 for 30 days. That is pretty expensive for some health checks. Especially when AWS offers health checks separately..

Health checks

AWS offers a service called 'Health checks' through its Route 53 DNS tooling.

Their DNS tooling is (again) great. It is ideal if you are using AWS load balancing because it makes setting up a domain name with a load balancer super easy.

DNS has always offered round robin load balancing as a built-in feature - if you define multiple A records for the same host it just serves your request from the next one in the list.

AWS takes this a little further and allows you to set up a 'Health check' which checks the health of a server periodically. Requests will only be served by 'healthy' IPs.
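The combination of round robin and health checks can be pictured with the small Python sketch below. It is a simplification for illustration only: it is not how Route 53 is implemented, and the function name `pick_next`, the health check callback, and the documentation-range IP addresses are all hypothetical.

```python
def pick_next(records, is_healthy, start):
    """Round robin over records, skipping unhealthy ones.

    Returns (ip, next_start) for the first healthy record at or after
    position start, wrapping around the list. Returns (None, start)
    if no record is healthy.
    """
    for offset in range(len(records)):
        index = (start + offset) % len(records)
        if is_healthy(records[index]):
            return records[index], (index + 1) % len(records)
    return None, start


# Hypothetical A records for one host, with the second server down.
records = ["192.0.2.1", "192.0.2.2", "192.0.2.3"]
down = {"192.0.2.2"}

position = 0
for _ in range(4):
    ip, position = pick_next(records, lambda addr: addr not in down, position)
    print(ip)
```

In this run the unhealthy address is never handed out, and requests simply alternate between the two servers that remain.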

They charge a little more for health checking external resources, but it is still super cost effective. I can have requests to our nodes served to verified running proxy servers at the DNS level for $0.75 per month.

Yes we still miss out on auto scaling, but for now we are learning to walk not run.

SSL

One other awesome thing about AWS is that they are now an SSL certificate authority. If you use API Gateway or their load balancers you can essentially get a free SSL certificate for any domain.

Wildcard SSL certificates cost $50 a year, so I guess if you have low outgoing data transfer using AWS could be cost effective (or neutral) just for the fact you get a free SSL certificate.

As mentioned, I ended up setting up our node cluster at Linode. Given that they do not offer SSL certificates, I had to set up my own. I have done this many times now, but it is still time consuming and annoying. Free SSL that is simple to set up is a great feature.

Everyone goes on about LetsEncrypt. Whilst the concept is great, it is absolutely not simple to set up. Offering free SSL certificates only to people who already have the technical know-how to set up a certificate seems foolish. The AWS offering is the start of something great whereby a non-technical user can set up SSL with one click.

Conclusion

So the conclusion is as follows..

AWS is amazing. I suspect that many server administrators have lost their jobs as a result of it. It allows you to set up absolutely everything you would ever need for running a product at any scale. The interfaces are simple, and everything works flawlessly.

BUT

The pricing model means that, depending on your use case, it may not be very cost effective. Paying a premium for a premium product is fine, but in my case the cost difference amounted to being able to run the product for 1 year vs 10 years. The tradeoff? A little bit of added complexity.

I have ended up with our web and database servers being hosted on AWS whilst our node cluster is set up on Linode. This offers the best of both worlds - cost effectiveness, simplicity, and security.